Effects of Mutual Eye Gazing in Real-time Versus Playback on Automatic Mimicry

Automatic mimicry is an important component of social interaction because it reflects the unconscious synchronization of behavior between two or more individuals. For example, when two individuals gaze into each other’s eyes, they may involuntarily start to mimic facial expressions or start to laugh because they share an emotional state. Eye contact is a form of social interaction in primates that increases the activation of brain regions such as the fusiform gyrus, anterior and posterior superior temporal gyri, medial prefrontal cortex, orbitofrontal cortex, and amygdala. Some of these regions mediate emotional and cognitive processes that help us interpret the feelings of others and express an appropriate response, such as empathy. The neuronal basis of automatic mimicry is attributed to the mirror neuron system, which is categorized into the parieto-frontal mirror neuron system and the limbic mirror neuron system (Cattaneo and Rizzolatti, 2009). In their eNeuro publication, Koike and colleagues used simultaneous fMRI of paired individuals (hyperscanning) to test the hypothesis that mutual interaction during eye gazing could activate the mirror neuron system in brain regions involved in self-awareness.

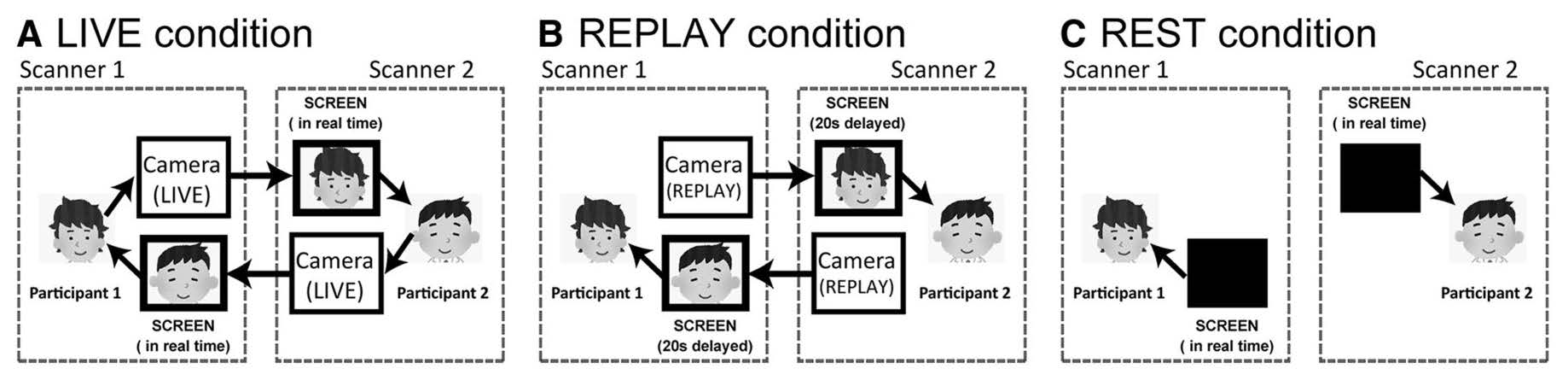

With the use of a double video system, 16 pairs of adults took part in three conditions. In the LIVE condition, mutual eye gazing occurred in real time. In the REPLAY condition, unbeknownst to the participants, the video of the partner’s face was presented with a 20-second delay. In the REST condition (used as a baseline measure), the video of the partner’s face shown in the LIVE and REPLAY trials was replaced by a black screen. Figure 1 is a schematic of all three conditions.

Koike and colleagues used eye blinking as a measure of shared communicative cues between paired participants. The average number of eye blinks per trial was similar between the LIVE and REPLAY conditions. However, the authors found that participants were more responsive to the timing of the partner’s eye blinks in the LIVE condition than in the REPLAY condition. Because participants were unaware of the delay in the REPLAY condition, this result suggests that conscious awareness was not the main factor governing a heightened mutual attentional state between paired participants.

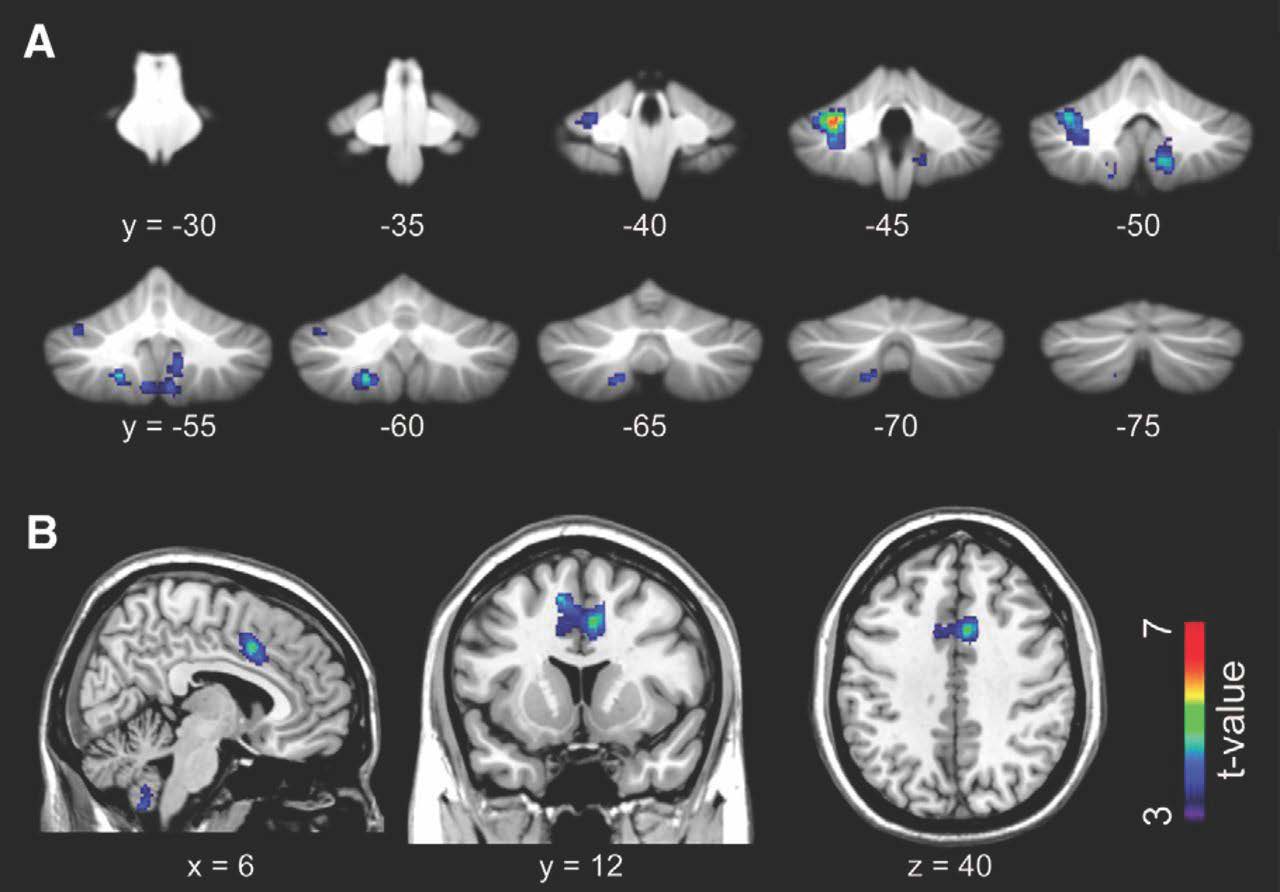

Using linear regression analyses of fMRI images, the authors found that, compared to the REPLAY condition, the LIVE condition was associated with increased activation in the cerebellum (Figure 2A) and anterior cingulate cortex (ACC; Figure 2B), as well as greater effective connectivity from the ACC to the anterior insular cortex (Figure 5 in Koike et al., 2019). These findings highlight the involvement of the cerebellar and limbic mirror system during real-time eye gazing. Synchronous increases in activation of the bilateral middle occipital gyrus were also noted in paired participants during the LIVE condition (Figure 6 in Koike et al., 2019). This phenomenon could potentially be mediated by interactive eye blinking, but its neural basis is not known.

This eNeuro publication describes an experimental approach that deviates from classic single-subject fMRI studies. Although the duration of mutual eye gazing in the study was atypical of everyday social interactions, the study emphasizes the importance of real-time communicative cues in social interactions.

Read the full article:

What Makes Eye Contact Special? Neural Substrates of On-Line Mutual Eye-Gaze: A Hyperscanning fMRI Study

Takahiko Koike, Motofumi Sumiya, Eri Nakagawa, Shuntaro Okazaki and Norihiro Sadato

References:

- Cattaneo L, Rizzolatti G (2009) The mirror neuron system. Arch Neurol 66:557–560. doi:10.1001/archneurol.2009.41 pmid:19433654

- Diedrichsen J (2006) A spatially unbiased atlas template of the human cerebellum. Neuroimage 33:127–138. doi:10.1016/j.neuroimage.2006.05.056 pmid:16904911

- Diedrichsen J, Balsters JH, Flavell J, Cussans E, Ramnani N (2009) A probabilistic MR atlas of the human cerebellum. Neuroimage 46:39–46. doi:10.1016/j.neuroimage.2009.01.045 pmid:19457380
