ToneBox: Automated Tool for Behavioral Analyses and Potential Integration With Other Open Source Methods

Vishal Patel is a rising sophomore in Biology in the Department of Biological Sciences at Carnegie Mellon University, Pittsburgh, PA

As automation and computer algorithms continue to improve, new and powerful tools have become useful for running high-throughput experiments in neuroscience (Rose et al., 2016; Sofroniew et al., 2016; Kuchibhotla et al., 2017; Francis et al., 2018). Automation of a variety of behavioral experiments permits the creation of large datasets that incorporate real-time measurements with contemporaneous data analysis. Automation also reduces experimenter-induced data variability and increases efficiency in a laboratory environment. When such tools are open source, creation by one lab broadens the research possibilities of many other labs. Automated open-source tools are best designed to be user-friendly, usable by researchers of any skill level, customizable to specific needs, and suitable for software updates or coding modifications. Small automated tools that can be incorporated within rodent housing enable behavioral recording during the day and night, which is particularly important for determining circadian influences on nocturnal rodents such as mice. In their publication, Francis and colleagues introduced the “ToneBox,” an open-source system for 24/7 automated auditory operant conditioning within the mouse home cage.

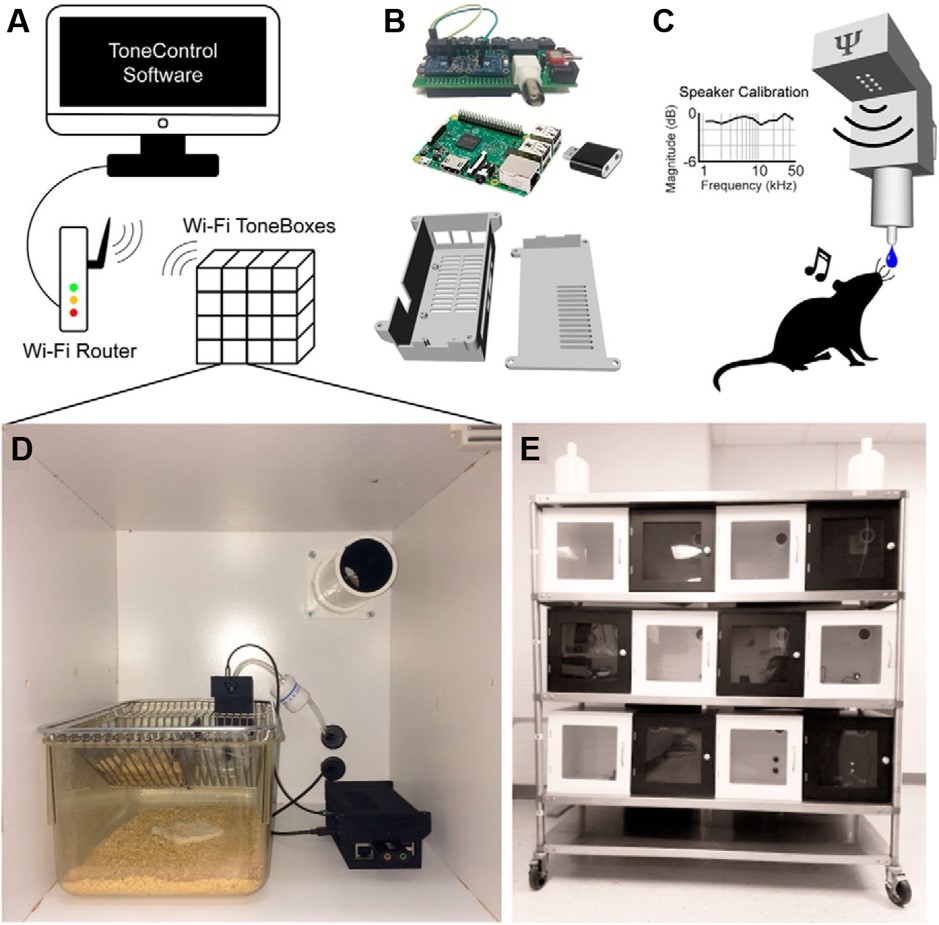

Figure 1 shows the ToneBox components located outside and inside the mouse home cage. The ToneBox central control unit (CCU), a small box located outside the home cage, is connected to the behavioral interface (BI) inside the home cage. The BI includes a touch-sensitive waterspout that is regulated to deliver water as a reward. A speaker mounted outside of the cage emits sound. Twelve sound-insulated home cages housed 24 adult C57BL/6J mice, with two same-sex littermates per cage. Through operant conditioning with positive reinforcement, the mice were trained to associate an auditory tone with a water reward. Once the mice were trained, the authors ran a tone detection task and monitored performance across the light and dark cycles. Each trial of the tone detection task consisted of 1 s of silence, 1 s of tone, and 2 s of silence. If a mouse licked the spout after the onset of the target tone (a “hit”), it received water. If a lick occurred randomly or during silence, water was not delivered, although the tone was still presented. A lick during the first second of silence was deemed an “early” hit. The mice had to abstain from licking for 5–25 s for the next trial to begin. Mice were also trained on a more challenging auditory discrimination task in which they learned to associate a target tone frequency with a water reward.
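The trial logic described above can be sketched in a few lines of Python. This is only an illustration, not the ToneBox software: the function name `classify_trial` and the “miss” label are hypothetical, and the sketch assumes that any first lick from tone onset to the end of the 4 s trial counts as a hit, which the description above does not state explicitly.

```python
from typing import Optional

# Trial timing as described in the text: 1 s silence, 1 s tone, 2 s silence.
SILENCE_PRE_S = 1.0
TONE_S = 1.0
SILENCE_POST_S = 2.0
TRIAL_S = SILENCE_PRE_S + TONE_S + SILENCE_POST_S


def classify_trial(first_lick_s: Optional[float]) -> str:
    """Label a trial from the time of its first lick (seconds from trial start).

    Assumption (not confirmed by the source): the hit window runs from tone
    onset to the end of the trial. "miss" is a hypothetical label for trials
    with no lick inside the trial.
    """
    if first_lick_s is None or not (0.0 <= first_lick_s < TRIAL_S):
        return "miss"
    if first_lick_s < SILENCE_PRE_S:
        return "early"  # lick during the first second of silence
    return "hit"  # lick at or after tone onset


def water_delivered(label: str) -> bool:
    """Water is delivered only on hits."""
    return label == "hit"


if __name__ == "__main__":
    for lick in (0.4, 1.3, 3.2, None):
        label = classify_trial(lick)
        print(lick, label, water_delivered(label))
```

Keeping the timing values as named constants makes it easy to adapt the sketch to other trial structures, such as the discrimination task.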

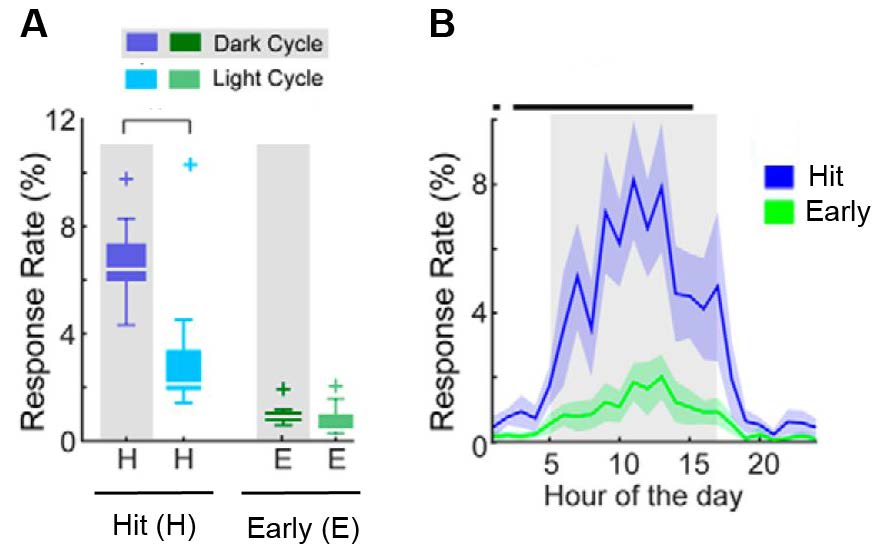

Francis and colleagues found that mice trained on both detection (Figure 2) and discrimination tasks showed consistent accuracy across the light and dark cycles, but responded at a much higher rate during the dark cycle. These results demonstrate that the ToneBox can accurately and efficiently train a large number of mice simultaneously in order to phenotype behavior, and confirm that it yields reliable results for auditory operant conditioning tasks in a large population of mice. The data also highlight how task engagement differs between dark and light cycles, which is an important consideration in the design of behavioral experiments. As the ToneBox is open source, low cost, and compact, it gives other researchers numerous opportunities to investigate research questions that may not have been possible to address previously.

Algorithms such as B-SOiD (Hsu & Yttri, 2020) also serve to increase the automation of behavioral experiments. Utilizing DeepLabCut (Mathis et al., 2018), B-SOiD is an unsupervised behavioral segmentation algorithm capable of autonomously extracting several types of animal behavior from video recordings. When a video marked with points of interest is fed into DeepLabCut, a comma-separated values (CSV) file can be generated with a frame-by-frame breakdown of the 3-D coordinates of rodent posture, from which behavioral processes such as locomotion, nose poking, or grooming can be identified (GitHub video link). The power of B-SOiD is its applicability to defining and even predicting distinct aspects of rodent behavior.

Potential integrated use of the ToneBox with B-SOiD would significantly broaden the range of experimental questions that can be addressed. For example, mice can be placed in an open field and trained on auditory tones from the ToneBox. As the ToneBox automates behavioral training, the number of experimenter hours required to accurately train mice on tones is low. With the ToneBox software, the responses to tones can be analyzed automatically, and with B-SOiD, the behavioral responses to the tones can be extracted as well.

Another example of the potential integration of open source tools would combine the ToneBox with DeepSqueak, which detects and records ultrasonic vocalizations in rodents (Coffey et al., 2019). As measurements captured by such automated tools are time-stamped, pooled data from the ToneBox and DeepSqueak could provide knowledge of rodent vocalization patterns during behavioral tasks. Additionally, if there were a software system that tracks social interactions between rodents, DeepSqueak and that social algorithm could be used in combination to observe naturalistic social interactions among mice. Going further, combining the ToneBox and DeepSqueak with a neural recording tool could provide valuable insight into the neural substrates that underlie social interactions in rodents.
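As a rough illustration of how time-stamped data from two tools might be pooled, the Python sketch below counts vocalizations that fall within a window after each tone onset. It does not use the actual output formats of the ToneBox or DeepSqueak: the function name `count_calls_per_trial`, the plain lists of timestamps, and the 4 s window are all hypothetical, and the sketch assumes both tools' clocks have already been aligned to a common reference.

```python
from bisect import bisect_left
from typing import List, Sequence


def count_calls_per_trial(
    tone_onsets_s: Sequence[float],
    call_times_s: Sequence[float],
    window_s: float = 4.0,
) -> List[int]:
    """Count vocalizations in the half-open window [onset, onset + window_s).

    Assumes (hypothetically) that tone onsets and vocalization times are in
    seconds on the same, already synchronized clock.
    """
    calls = sorted(call_times_s)
    counts = []
    for onset in tone_onsets_s:
        lo = bisect_left(calls, onset)             # first call at or after onset
        hi = bisect_left(calls, onset + window_s)  # first call at or after window end
        counts.append(hi - lo)
    return counts


if __name__ == "__main__":
    # Made-up timestamps, for illustration only.
    onsets = [10.0, 30.0]
    calls = [9.5, 10.0, 11.2, 13.9, 14.0, 31.0]
    print(count_calls_per_trial(onsets, calls))
```

Sorting the vocalization times once and using binary search keeps the lookup fast even for recordings that span many days of home-cage data.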

DIY Nautiyal Automated Modular Instrumental Conditioning (DIY-NAMIC) is another recently published open-source tool (Lee et al., 2020). DIY-NAMIC measures behavioral aspects of impulsivity, which has implications for several neurological disorders. Automated training of mice using DIY-NAMIC enabled the authors to capture data during the short period of adolescence in mice. DIY-NAMIC is another example of how reducing the reliance on researchers to conduct experiments yields several benefits, including the advancement of neuroscience research.

With automated open-source tools, collecting and analyzing data is simple, and the range in scale of experiments is broad. Thus, researchers have access to tools that enable high-throughput experiments to be completed. Automated tools can identify subtle aspects of rodent behavior that experiments conducted by researchers may not detect. Therefore, open-source tools not only represent an advance in how experiments are performed but likely will be the foundation of the increased understanding of the intricacies of rodent behavior.

This Reader's Pick was reviewed and edited by eNeuro Features Editor Rosalind S.E. Carney, D.Phil.

References:

- Coffey KR, Marx RG, Neumaier JF. DeepSqueak: a deep learning-based system for detection and analysis of ultrasonic vocalizations. Neuropsychopharmacology. 2019;44(5):859–68.

- Francis NA, Winkowski DE, Sheikhattar A, Armengol K, Babadi B, Kanold PO. Small Networks Encode Decision-Making in Primary Auditory Cortex. Neuron. 2018;97(4):885–897.e6.

- Hsu AI, Yttri EA. B-SOiD: An Open Source Unsupervised Algorithm for Discovery of Spontaneous Behaviors. bioRxiv. 2020 Mar 7;770271.

- Kuchibhotla KV, Gill JV, Lindsay GW, Papadoyannis ES, Field RE, Sten TAH, et al. Parallel processing by cortical inhibition enables context-dependent behavior. Nat Neurosci. 2017;20(1):62–71.

- Lee JH, Capan S, Lacefield C, Shea YM, Nautiyal KM. DIY-NAMIC behavior: A high-throughput method to measure complex phenotypes in the homecage. eNeuro. 2020 Jun 19;ENEURO.0160-20.2020.

- Mathis A, Mamidanna P, Cury KM, Abe T, Murthy VN, Mathis MW, et al. DeepLabCut: markerless pose estimation of user-defined body parts with deep learning. Nat Neurosci. 2018;21(9):1281–9.

- Rose T, Jaepel J, Hübener M, Bonhoeffer T. Cell-specific restoration of stimulus preference after monocular deprivation in the visual cortex. Science. 2016 Jun 10;352(6291):1319–22.

- Sofroniew NJ, Flickinger D, King J, Svoboda K. A large field of view two-photon mesoscope with subcellular resolution for in vivo imaging. eLife. 2016;5.

Read the full article:

Automated Behavioral Experiments in Mice Reveal Periodic Cycles of Task Engagement within Circadian Rhythms

Nikolas A. Francis, Kayla Bohlke, and Patrick O. Kanold
